Accelerated DataOps processes

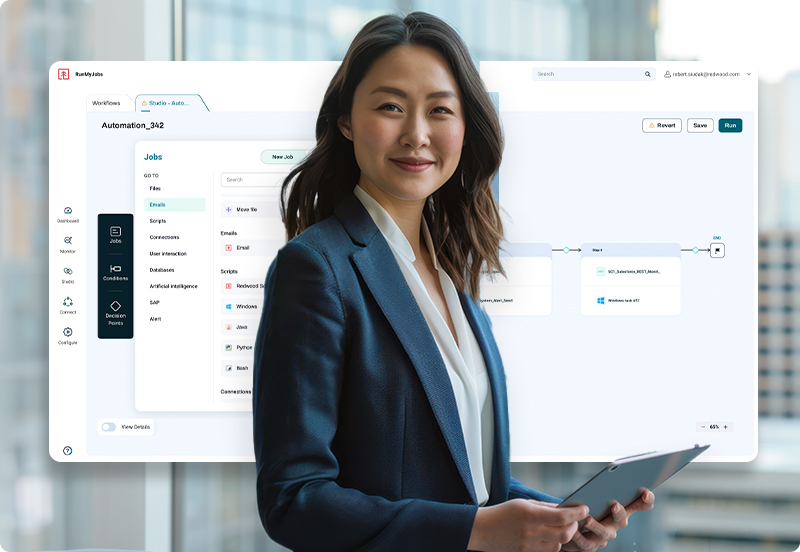

RunMyJobs by Redwood enables data teams to gain control over DataOps workflows to ensure full visibility into data freshness and lineage.

Tame data chaos

Support continuous delivery of high-quality data with the right data orchestration and automation platform.

-

Consistent analytics pipelines

Apply comprehensive workflow orchestration to make your development and analytics teams more collaborative and effective. -

A unified data landscape

With RunMyJobs as your DataOps tool, no system is out of reach. Connect your business and IT services to your DataOps processes. -

Full SLA observability

Visualize risks and opportunities for improving your DataOps automations and aligning with customer expectations with the SLA dashboard. -

Repeatable DataOps practices

Reuse workflow design components and encourage self-service automation to streamline how you manage data processes.

Enable smooth workflow management

Create flexible workflow plans using dynamic, rule-based scheduling built on a centralized engine that eliminates the downsides of daily scheduling and delivers high job throughput. No matter your preferred data model, the right data orchestration solution will support the scalability of your DataOps initiatives.

RunMyJobs supports workflow health by optimizing performance and load with dynamic workload balancing.

Unify diverse DataOps platforms

Integrating your workload automation solution directly with ERPs, CRMs, financial tools, databases, big data platforms and ETL and BI solutions allows you to lay the foundation of a strong data architecture.

RunMyJobs has out-of-the-box integrations for a wide range of technologies that interact in critical stages of your data pipelines, including:

- Data storage and processing tools

- Data integration and transformation solutions

- Data warehousing and analytics platforms

- Business solutions

If you prefer, integrate via APIs by building your own connectors using the Connector Wizard.

Align data operations with business SLAs

Most data orchestration processes are time-critical. Especially if you need to integrate and analyze data to drive business processes based on sales and customer data, you need to have full data observability.

RunMyJobs makes it simple to identify risks to your workflows and how to remediate problems. Configure SLAs with custom rules and thresholds and set up escalations and alerts to ensure the right people are informed if critical deadlines are predicted to slip. Use dashboard views and controls to track and monitor automations in real time, and rely on machine learning-driven predictive analytics to maintain SLA performance.

Deliver smarter IT service management

RunMyJobs has the capabilities and features to automate any data process, from data hygiene to validation to modeling. It’s particularly transformational for IT service management (ITSM), allowing you to:

- Build jobs to use existing scripts or run commands on remote systems.

- Collect data from APIs.

- Manage problems with bi-directional ServiceNow integration.

- Automate decommissioning and data archiving.

Integrate seamlessly with ServiceNow

Offer agility to your IT team members by supporting large and complex automation chains across multiple processes and applications. Automate IT system maintenance and create and assign incidents in ServiceNow with predefined process templates.

Use this bi-directional integration to fetch ServiceNow incident information and automate creation or updates in RunMyJobs or respond with RunMyJobs’ built-in messaging tool.

Related resources

Learn more about using RunMyJobs to orchestrate complex processes and optimize your data operations.

Big data FAQs

What is the difference between DevOps and DataOps?

DevOps and DataOps share similar principles, but DevOps focuses on optimizing software delivery, while DataOps centers around optimizing the delivery of data.

DevOps is a set of practices aimed at automating and streamlining the processes between software development (Dev) and IT operations (Ops). The primary goal of DevOps is to shorten the software development lifecycle while ensuring the quality and reliability of software through continuous integration and delivery (CI/CD). DevOps focuses on collaboration, automation and integration between development and operations teams to quickly and reliably deliver software.

DataOps, on the other hand, is a methodology that applies DevOps-like practices to the end-to-end data lifecycle. It focuses on improving the communication, integration and automation of data flows between data engineers, data scientists and IT operations to enable faster, more reliable delivery of data for analysis. The goal of DataOps is to enhance the efficiency and quality of data management and analytics by automating data pipelines and ensuring that data is easily accessible, reliable and secure.

Learn more about DevOps Workflow Automation and the top best practices your team should consider.

What is DataOps?

DataOps is a data management methodology that aims to improve data pipelines by changing how data engineers, data scientists and other data professionals automate and communicate about them. It applies the principles of DevOps, lean manufacturing and agile practices to the field of data analytics, with the goal of delivering data-driven insights faster and more reliably.

DataOps is focused on the entire data lifecycle, from data ingestion to data transformation, analysis and output. It seeks to break down silos between data teams and ensure that data is handled in a consistent, automated and error-free manner. DataOps emphasizes the use of automation tools and collaboration to improve the quality and speed of data delivery, allowing organizations to derive insights and make decisions more efficiently.

In a DataOps environment, automation and continuous integration are key aspects of managing and monitoring data pipelines, ensuring that data is always available, up-to-date and accurate.

Learn more about data management and how to take your data from chaos to clarity.

What is data orchestration?

Data orchestration refers to the automated coordination and management of data flows across multiple systems and processes. It ensures that data is ingested, processed, transformed and delivered to the right destination in a timely and efficient manner. The main objective of data orchestration is to create a seamless flow of data from various sources, enabling data teams to efficiently manage and manipulate data without manual intervention.

In a data orchestration system, the various stages of the data lifecycle — from data collection and ingestion to processing, storage and analysis — are automated and monitored. Orchestration tools handle the scheduling and execution of tasks, ensuring that data pipelines run smoothly and that dependencies between tasks are properly managed.

In a typical data orchestration scenario, data is ingested from various sources (e.g., databases, cloud storage, APIs), then it’s cleaned, transformed or enriched based on business logic. Finally, it’s sent to storage or analytical tools for reporting and visualization.

Learn more about job orchestration and how it can be used for data management automation.

What are the main functions of DataOps?

The main functions of DataOps include:

- Automation of data pipelines: DataOps emphasizes automating the various stages of data pipelines, reducing the manual effort required for these processes and ensuring that data flows are consistent, repeatable and scalable.

- Continuous Integration and Delivery (CI/CD): Just like in DevOps, DataOps incorporates CI/CD practices for data workflows. This means that changes in data sources, pipeline configurations or analysis models are automatically tested, validated and deployed into production environments without disruption.

- Data monitoring and quality assurance: Ensuring data quality is one of the core functions of DataOps. Using automated testing, monitoring and validation tools, DataOps teams can catch issues early in the data pipeline, such as corruption, missing data or performance bottlenecks. This helps maintain the accuracy and reliability of data for business insights.

- Version control: Similar to how software versioning is handled in DevOps, DataOps employs version control for data pipelines and datasets. This allows teams to track changes, roll back to previous versions if necessary and maintain accountability throughout the data lifecycle.

- Data governance and security: DataOps ensures that data governance and security protocols are integrated into every stage of the data pipeline. This includes managing data access controls, compliance with regulations and securing sensitive data as it moves through the pipeline.

- Real-time data processing: DataOps enables real-time or near-real-time data processing and analytics, allowing organizations to make faster, more informed decisions based on up-to-date data.

Take a deeper dive into data pipelines and the 6 benefits of data pipeline automation.