How ChatGPT is improving IT and business processes

Machine learning, artificial intelligence, foundation models, large language models (LLM), generative AI, general AI — many of these terms are becoming part of our modern vernacular.

While we’re focusing on these concepts in business and reading about them in the news, many of us are still looking to the future — for a revolutionary moment to come along in AI or for it to get just that little bit better.

The latest advances in AI and machine learning mean we have many opportunities now to improve work and play. Specifically, we can reduce the resource burden of low-value or time-consuming tasks and enrich processes with natural language analysis and content. These benefits are readily accessible, yet organizations in many industries have yet to realize them.

Machine learning vs. AI

Machine learning (ML) is sometimes seen as a precursor to AI, but it’s still part of the whole AI picture and is highly relevant today. Though largely unseen by end users, ML is built into many software products we already use.

Training models to recognize patterns and anomalies is the most common use of ML today. In automation, this surfaces in the infrastructure needed for large-scale training: services such as AWS Batch give AI developers an easy way to run training jobs at scale.

The search for more generalized forms of ML models brought us to the current phase of AI development.

Where AI is now

Generative AI and large language models (LLMs), such as OpenAI’s ChatGPT, offer a human-friendly way of interacting with the most recent models. While this makes them feel much closer to the “real” AI we imagine, in most cases, the capabilities we can reliably and confidently use are relatively narrow.

As part of a workflow, these pre-baked models can quickly summarize information and bridge the gap between workflow and employee. The information you ask a model to summarize could be about the workflow itself or the process the workflow is automating.

Remember, to get a desired and consistent output, we need to be specific in our prompts.

Foundation models and “AI PaaS” services, such as Amazon Q and Amazon Bedrock, are pre-trained and often tuned for a specific purpose. To get real value from them, businesses need to supply them with data from their own business processes.

Enhancing technology solutions with AI models is already common, but with the new wave of AI technologies, we can expect many solutions to offer a more human interface for accessing knowledge and information.

AI workflow automation potential

End-to-end process automation depends on an integrated framework that seamlessly connects automation tools, processes and data sources—an automation fabric. AI complements automation fabrics in the form of built-in features or connectors that facilitate greater process efficiency and accelerate business outcomes.

Putting the more novel or complicated advancements aside for now, let’s dig a bit more into how implementing AI-powered workflow automation can bring the benefits of AI and LLMs to your routine tasks.

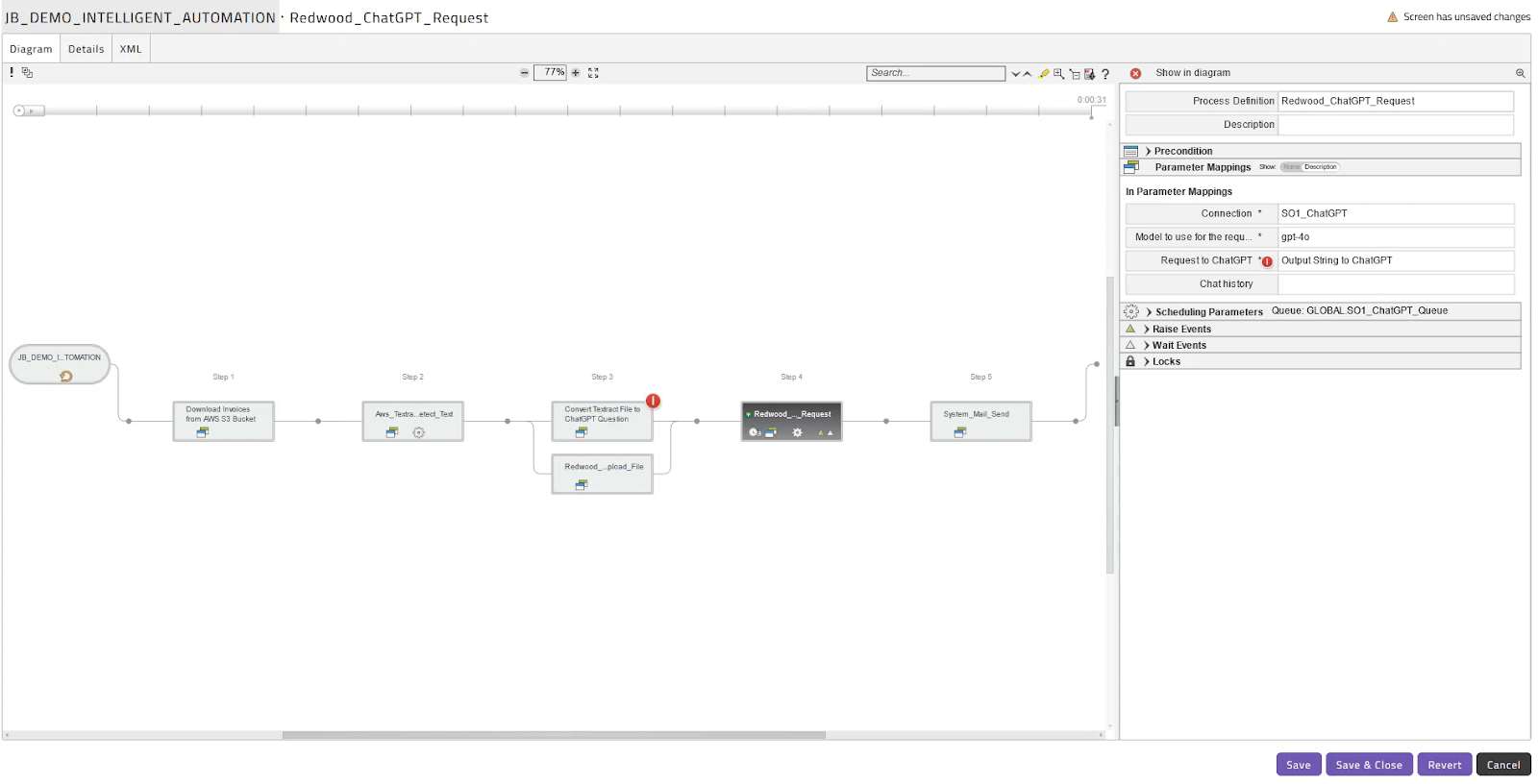

What’s possible with the ChatGPT connector for RunMyJobs

We’ve built a ChatGPT integration for our workload automation solution, RunMyJobs by Redwood, so AI can add to the platform’s core value of unleashing human potential. For many uses, ChatGPT sits alongside workflow steps, either as a supplement or as a way to interface with users. In some cases, it can replace existing steps or manual tasks that users would otherwise do later.

With the ChatGPT connector and job template, adding a prompt that includes information from a workflow is simple, and the prompt works like any other step in a chain.

As with the ChatGPT user interface, you can send data as a chat, in this case via the API. The connector lets you configure the prompt you send and use the response in your workflow. Effectively, you send ChatGPT a question, get a response back and can optionally keep the history for a contextual conversation. You can extend this functionality by pulling in information from any source with other connectors and scripts.
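To make the question-and-response pattern concrete, here is a minimal sketch of what a chat call over an API looks like. It is not the RunMyJobs connector's implementation: it assumes OpenAI's public Chat Completions REST endpoint, an API key in a hypothetical `OPENAI_API_KEY` environment variable, and a hypothetical `ChatSession` helper. With `keep_history` on, earlier turns are resent with each new question so the model has the context of the conversation.

```python
import json
import os
import urllib.request
from typing import Callable

# Assumption: OpenAI's public Chat Completions REST endpoint.
OPENAI_URL = "https://api.openai.com/v1/chat/completions"

Message = dict[str, str]


def openai_transport(model: str, messages: list[Message]) -> str:
    """POST the messages to the Chat Completions endpoint and return the reply text."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    request = urllib.request.Request(
        OPENAI_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            # Hypothetical variable name; use your own secret handling.
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
        },
    )
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


class ChatSession:
    """Hypothetical helper: sends prompts to a chat model, optionally keeping history."""

    def __init__(self, model: str, keep_history: bool = False,
                 transport: Callable[[str, list[Message]], str] = openai_transport):
        self.model = model
        self.keep_history = keep_history
        self.transport = transport  # swappable, e.g. for testing without network calls
        self.history: list[Message] = []

    def ask(self, prompt: str) -> str:
        user_msg = {"role": "user", "content": prompt}
        # With history on, earlier turns are resent so the model sees the context.
        messages = (self.history + [user_msg]) if self.keep_history else [user_msg]
        reply = self.transport(self.model, messages)
        if self.keep_history:
            self.history = messages + [{"role": "assistant", "content": reply}]
        return reply
```

In use, `ChatSession("gpt-4o-mini", keep_history=True).ask("Summarize: ...")` would send one question; the model name here is only an example to replace with whichever model your account uses.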

Let’s talk about how organizations are expanding the power of RunMyJobs with ChatGPT.

Crafting emails

Emails and other writing tasks are some of the most common reasons people use tools like ChatGPT today. Because the underlying model was trained on an immense body of text, it does an excellent job.

In an automation context, you’d typically send an email when a job starts or completes. The email might simply notify, or it might embed data, times or other information. The problem is that the original formatting may break down, or the data may be missing or changed in ways that make the email hard to read.

In RunMyJobs, you can collate a set of information using workflow parameters and other data, send that to ChatGPT with a request for an email summary, and then use the output to craft your email. You could store the data in RunMyJobs (in a data table or parameter) or in a separate file the workflow can access.
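As an illustration of collating workflow data into a single, specific prompt, the sketch below turns a set of parameters into an email-summary request. The function name and the parameter names in the usage example are hypothetical; in RunMyJobs the values would come from workflow parameters, a data table or a file. Marking empty values explicitly addresses the missing-data problem described above, because the model is told to mention gaps rather than paper over them.

```python
def build_email_prompt(workflow_name: str, parameters: dict[str, object]) -> str:
    """Collate workflow data into one specific prompt asking for an email summary."""
    lines = []
    for name, value in sorted(parameters.items()):
        # Flag missing data explicitly instead of letting it silently disappear.
        shown = "(missing)" if value is None or value == "" else value
        lines.append(f"- {name}: {shown}")
    return (
        f"Write a short, plain-text status email about the workflow '{workflow_name}'. "
        "Use only the data below and do not invent values. "
        "Mention any value marked (missing).\n\n"
        "Data:\n" + "\n".join(lines)
    )
```

For example, `build_email_prompt("Nightly invoice load", {"rows_loaded": 1200, "error_count": None})` produces a prompt whose data section lists `- error_count: (missing)` and `- rows_loaded: 1200`.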

Alternatively, you could maintain a conversation with ChatGPT: each time the workflow progresses, you’d send it the new information, such as the step that completed, when it completed and its outputs. At the end of the process, or when an error occurs, it can provide a summary of events so far.
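A minimal sketch of this running-conversation pattern follows. `WorkflowConversation` is a hypothetical helper, not part of RunMyJobs, and `send` stands in for whatever actually posts messages to the chat model. It assumes a stateless chat API, so the full message list is sent on every call; each completed step adds one question and one reply to that list.

```python
from datetime import datetime, timezone
from typing import Callable, Optional

Message = dict[str, str]


class WorkflowConversation:
    """Hypothetical helper: keeps one running chat per workflow run."""

    def __init__(self, send: Callable[[list[Message]], str]):
        # `send` posts a list of chat messages to the model and returns the reply text.
        self.send = send
        self.messages: list[Message] = [{
            "role": "system",
            "content": "You track the progress of a batch workflow. "
                       "Acknowledge each update in one short sentence.",
        }]

    def _ask(self, content: str) -> str:
        self.messages.append({"role": "user", "content": content})
        # Assuming a stateless chat API, the whole history goes out on every call.
        reply = self.send(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply

    def step_completed(self, step: str, outputs: str,
                       when: Optional[datetime] = None) -> str:
        when = when or datetime.now(timezone.utc)
        return self._ask(f"Step '{step}' completed at {when.isoformat()}. Outputs: {outputs}")

    def summarize(self, error: Optional[str] = None) -> str:
        reason = f"The workflow failed with this error: {error}" if error else "The workflow finished."
        return self._ask(f"{reason} Summarize the events so far in no more than five bullet points.")
```

Calling `step_completed` after each step and `summarize` at the end (or with the error text on failure) yields the end-of-run summary described above.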

You could also send ChatGPT other information the workflow handles as part of its processes, whether structured data like CSV lists or unstructured data like emails, and ask for summaries or answers to specific questions about the data to include in emails to users. See more examples in the upcoming sections.

⚠️ Although it’s interesting to have a conversation with the AI chatbot throughout the workflow, and it can be used for ongoing enhancements, I have to point out that this conversational method could be quite expensive: it makes an API call at every step, and each call typically resends the growing conversation history.
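To see why the cost grows, here is a rough back-of-the-envelope model. It assumes a stateless chat API where call k resends all k step updates plus the k − 1 earlier replies, with every update and reply a fixed (hypothetical) size; it ignores the system prompt, output-token pricing and the final summary request. Sending everything once at the end grows linearly with the number of steps, while the conversational approach grows roughly with its square.

```python
def conversation_input_tokens(steps: int, step_tokens: int, reply_tokens: int) -> int:
    """Input tokens when each step is its own call that resends the full history.

    Call k sends k step updates plus the k - 1 replies received so far.
    """
    return sum(k * step_tokens + (k - 1) * reply_tokens for k in range(1, steps + 1))


def batch_input_tokens(steps: int, step_tokens: int) -> int:
    """Input tokens when all step data is sent once, in a single summary call."""
    return steps * step_tokens
```

With 10 steps of 200 tokens each and 50-token replies, the conversational approach sends 13,250 input tokens across 10 calls, compared with 2,000 tokens in one call for the batch approach.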

Quick translations and extracting text

In many use cases, you might be handling documents, emails or other data that’s in a language other than your main business language or is unstructured in nature.

Here, it’s a good idea to think about how critical the translation or extraction is. The benefit of using ChatGPT or another general model is that you can instruct it in general terms on what to do. The downside is that this leaves room for variable responses: the same input won’t always produce the same output.

When dealing with emails or other forms of non-critical communication, we could use ChatGPT to make a quick translation pass to help any users who need to assess the data later.

Or you might need to handle a dataset with comments in a different language; you could pass comments selectively to ChatGPT for translation.
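Passing comments selectively keeps the number of API calls down. The sketch below assumes a CSV with hypothetical `id`, `comment` and `lang` columns, where `lang` already holds an ISO 639-1 code, and builds one translation prompt per comment that is non-empty and not already in the business language. How you populate `lang` (for example, by asking the model for the language code first) is left open.

```python
import csv
import io


def translation_prompts(csv_text: str, business_lang: str = "en") -> dict[str, str]:
    """Build one translation prompt per comment that isn't in the business language.

    Assumes hypothetical columns: id, comment, lang (an ISO 639-1 code).
    """
    prompts = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        comment = row["comment"].strip()
        # Skip empty comments and ones already in the business language.
        if not comment or row["lang"].strip().lower() == business_lang:
            continue
        prompts[row["id"]] = (
            f"Translate the following comment into the language with ISO 639-1 code "
            f"'{business_lang}'. Reply with the translation only.\n\n{comment}"
        )
    return prompts
```

Each prompt is keyed by the row `id`, so the translated text can be written back against the right record.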

We could also ask ChatGPT to extract specific portions of text, perhaps looking for countries, place names or other recognizable information to include in a summary report or an email.
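Because responses can vary, it helps to ask for extracted text in a fixed machine-readable shape and validate it before it goes into a report or email. This sketch assumes a hypothetical JSON shape with `countries` and `places` keys; the prompt text and function names are illustrative only. A reply that doesn’t match raises an error instead of passing bad data downstream.

```python
import json

# Hypothetical prompt asking for a fixed JSON shape.
EXTRACTION_PROMPT = (
    "Extract every country and place name mentioned in the text below. "
    'Reply with JSON only, in the form {"countries": [...], "places": [...]}.\n\n'
)


def parse_extraction(reply: str) -> dict[str, list[str]]:
    """Validate the model's reply; raise ValueError rather than pass bad data on."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Reply was not valid JSON: {exc}") from exc
    result = {}
    for key in ("countries", "places"):
        values = data.get(key, []) if isinstance(data, dict) else None
        if not isinstance(values, list) or not all(isinstance(v, str) for v in values):
            raise ValueError(f"Expected a list of strings for '{key}'")
        result[key] = values
    return result
```

The workflow would send `EXTRACTION_PROMPT + text`, then pass the reply to `parse_extraction`, treating a `ValueError` as a failed step to retry or review.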

⚠️ It’s worth remembering that while ChatGPT is capable of producing translations, the model hasn’t been fine-tuned specifically for translation tasks. For critical or professional translation needs, it’s generally recommended to use dedicated machine translation models or services designed explicitly for translation.

Interpreting and summarizing data

Data analysis is a huge undertaking, so it’s particularly valuable to acquire a quick summary or identify something specific from a given dataset. Sending a question to ChatGPT could be the answer. But to do so efficiently, it’s key to learn proper prompt engineering and balance specific instructions with simple language.

Prompt engineering tips

- Send a question and get back an answer, which you can then store against the record or use in communications like email. A command like “Assess the tone of this email in one word” could return a nice indication of an email’s priority, at least in the eyes of the sender. In contrast, “Assess the tone of this email as either Polite, Neutral, Annoyed or Angry” would give us a more consistent way to measure responses.

- Use specific questions to reduce back-and-forth. “What language is this text in?” could generate some useful information, but you could improve the prompt by asking: “What is the ISO 639 language code of this text?”

- Direct the AI to help you make a decision. For example, prompt ChatGPT with: “Please respond with True if any of the rows in this CSV contain the term ‘outstanding invoice,’ or False if none do.”

- Experiment with a persona frame of reference. Try saying something like: “You are a finance operations manager” before asking for a data summary or piece of content.

- Always test your outputs. Use data that’s close to real-world and run the job through a test workflow until you’re satisfied the results are repeatable.
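The tips above all narrow the model’s answer to a small set of allowed values, which also makes replies easy to check in code. This is a sketch under stated assumptions: the helper names are hypothetical, the tone labels come from the example prompt, and the language check assumes you ask for the two-letter ISO 639-1 form and only verifies the code’s shape, not that it is a real code. Any reply outside the allowed set raises an error so the job can flag it rather than store it.

```python
import re

TONES = ("Polite", "Neutral", "Annoyed", "Angry")


def tone_prompt(email_body: str) -> str:
    """Constrained prompt: the model must pick exactly one of the listed tones."""
    return ("Assess the tone of this email as either " + ", ".join(TONES[:-1])
            + f" or {TONES[-1]}. Reply with that one word only.\n\n" + email_body)


def parse_tone(reply: str) -> str:
    word = reply.strip().strip(".").capitalize()
    if word not in TONES:
        raise ValueError(f"Unexpected tone: {reply!r}")
    return word


def parse_language_code(reply: str) -> str:
    code = reply.strip().strip(".").lower()
    # Checks the two-letter ISO 639-1 shape only, not membership in the code list.
    if not re.fullmatch(r"[a-z]{2}", code):
        raise ValueError(f"Not an ISO 639-1 style code: {reply!r}")
    return code


def parse_bool(reply: str) -> bool:
    word = reply.strip().strip(".").lower()
    if word not in ("true", "false"):
        raise ValueError(f"Expected True or False, got {reply!r}")
    return word == "true"
```

Running these parsers over replies from your test workflow is a cheap way to confirm the outputs are repeatable before going live.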

Reference this guide from DigitalOcean to explore more prompt engineering best practices.

A note on data security

To secure your business data while using the ChatGPT connector for RunMyJobs, you should use your own instance of ChatGPT through ChatGPT Team or ChatGPT Enterprise. This will mean your data is kept separate, though still sent to the OpenAI cloud platform.

Your organization may have policies and processes in place to remove or mask personally identifiable information (PII), but even with some data removed or anonymized, you can still ask useful questions to make decisions or share information with other people.

In a workflow handling invoice or sales data, you might anonymize the data and send a list to ChatGPT and be able to ask some specific questions — like our earlier example to look for outstanding invoices. Or, you could ask it to produce summaries of the data to push quick insights to other teams via email rather than them needing to access reports when they have time.
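One simple way to anonymize such a list is to swap customer names for stable aliases before sending and keep the mapping inside the workflow. The sketch below is a hypothetical helper, not a complete PII solution: it only replaces a named customer field (aliases follow the same style as the “Customer 2” labels in the sample summary below) and blanks out anything that looks like an email address. Your organization’s own masking policies should take precedence.

```python
import re

# Rough pattern for email addresses; not a full RFC 5322 matcher.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize(rows: list[dict[str, str]], name_field: str = "customer"
                 ) -> tuple[list[dict[str, str]], dict[str, str]]:
    """Replace customer names with stable aliases and blank out email addresses.

    Returns the masked rows plus an alias -> real-name map that stays inside
    the workflow, so replies can be mapped back before they reach people.
    """
    aliases: dict[str, str] = {}
    masked = []
    for row in rows:
        name = row[name_field]
        if name not in aliases:
            aliases[name] = f"Customer {len(aliases) + 1}"
        new_row = {key: EMAIL_RE.sub("[email removed]", value) for key, value in row.items()}
        new_row[name_field] = aliases[name]
        masked.append(new_row)
    return masked, {alias: name for name, alias in aliases.items()}
```

The same customer always receives the same alias, so questions like “which customer has the highest outstanding amount?” still get meaningful answers that can be mapped back afterward.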

At the simplest level, we could ask for “a short summary of this invoice data” and receive an output similar to what’s shown below to use in an email.

Key metrics:

- Total amount invoiced: $365,336.07

- Total amount paid: $255,329.95

- Total outstanding amount: $110,006.12

Observations:

- Highest total amount invoiced: Customer 2 with $153,682.16.

- Highest total amount paid: Customer 2 with $103,026.03.

- Highest outstanding amount: Customer 2 with $50,656.13.

- Lowest total amount invoiced: Customer 4 with $30,870.14.

- Lowest outstanding amount: Customer 4 with $5,071.98.
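In keeping with the “Always test your outputs” tip, it’s worth checking headline figures like these before the email goes out, since language models can get arithmetic wrong. This sketch, with hypothetical function names and hypothetical `invoiced`/`paid` column names, parses amounts with `Decimal` to avoid floating-point rounding, checks that a reported outstanding amount equals invoiced minus paid, and recomputes totals from the source rows.

```python
from decimal import Decimal


def money(text: str) -> Decimal:
    """Parse an amount such as '$110,006.12' exactly, without float rounding."""
    return Decimal(text.replace("$", "").replace(",", "").strip())


def outstanding_is_consistent(invoiced: str, paid: str, outstanding: str) -> bool:
    """True if the reported outstanding amount equals invoiced minus paid, to the cent."""
    return money(invoiced) - money(paid) == money(outstanding)


def recompute_totals(rows: list[dict[str, str]]) -> dict[str, Decimal]:
    """Recompute the headline figures from hypothetical 'invoiced'/'paid' columns."""
    invoiced = sum((money(r["invoiced"]) for r in rows), Decimal("0"))
    paid = sum((money(r["paid"]) for r in rows), Decimal("0"))
    return {"invoiced": invoiced, "paid": paid, "outstanding": invoiced - paid}
```

Applied to the sample output, both the overall totals ($365,336.07 − $255,329.95 = $110,006.12) and Customer 2’s figures ($153,682.16 − $103,026.03 = $50,656.13) are internally consistent; comparing against `recompute_totals` on the real data would also catch figures that are consistent but wrong.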

Generative AI for more efficient orchestration

Even if AI is not quite yet an omnipotent being, you can start weaving ChatGPT and other AI-powered tools into your workflows to enrich and streamline processes, save time, give your team members additional insights and speed up decision-making.

If your organization is already using generative AI to a significant degree, there may be more integrated ways to enhance your workflows. A model that understands more about your business could answer specific and unique questions to help you achieve intelligent process automation.

And remember, the ChatGPT integration is just one way to incorporate AI into your workload automation with RunMyJobs, all within a familiar user experience.

Connecting to other AI systems via REST API is easy with the Connector Wizard.

About The Author

Dan Pitman

Dan Pitman is a Senior Product Marketing Manager for RunMyJobs by Redwood. His 25-year technology career has spanned roles in development, service delivery, enterprise architecture and data center and cloud management. Today, Dan focuses his expertise and experience on enabling Redwood’s teams and customers to understand how organizations can get the most from their technology investments.